Imagine standing in the middle of a forest. How far into the forest do you think you could hear? 200 metres? A kilometre? Perk up your ears. Could you count the number of birds calling in that forest? In other words, could you identify every bird that is within earshot? Believe it or not, while this method is difficult and imprecise, it’s one of the most common techniques for monitoring bird populations around the world, and researchers are always looking for ways to improve it.

Counting individual birds, amphibians, and other vocalizing animals is challenging. Fundamental questions, like how far your ear (or the recording device of your choice) can “hear”, are crucial to address. It’s precisely these types of questions that University of Alberta graduate student Dan Yip is looking to answer. But instead of evaluating human hearing, he’s working with the latest acoustic technology, autonomous recording units (ARUs), which are becoming the tool of choice in this field.

Yip is part of the Acoustic Monitoring Group (AMG), a collaboration between the Alberta Biodiversity Monitoring Institute (ABMI) and the lab of Dr. Erin Bayne, Professor of Biological Sciences at the University of Alberta. Dr. Bayne’s research focuses on understanding the influences of human activity on species habitat selection in the boreal forest, including the responses of boreal forest birds to energy sector development. It’s a great complement to ABMI’s province-wide system for monitoring biodiversity, including birds. Yip’s objective is to extract more information from recorded birds, to get a better understanding of which species are found in different parts of Alberta’s boreal forest and, for some species, an estimate of their population density.

Working in the Lower Athabasca Region (a 93,000 square kilometre area in northeast Alberta), Yip and the rest of the AMG use ARUs to monitor vocalizing animals. The benefits of using these recorders are profound; for example, field crews recorded almost 20 terabytes of sound data in 2013 alone – that’s over 2.5 years of continuous sound files!

As a result, ecologists now have unprecedented access to enormous amounts of high-quality data on many species of birds, amphibians, and other wildlife. ARUs increase accuracy and encourage collaboration, as problematic calls can be discussed and debated amongst interpreters or even automatically identified by innovative software. “You can census just about anything vocal with these recorders,” enthuses Yip. “And, when compared to relying on human technicians in the field, the use of ARUs avoids the need for repeated site visits, decreases bias and helps to alleviate the stress of needing to accurately recognize calls on the spot.”

A major limitation of ARUs, however, is the inability (at present) to measure how far away from the ARU a sound originated. Knowing the distance from the sound source to the observer (in this case, the ARU) is essential for turning counts of animals recorded by sound into a value of species density, or the number of individuals of a species per unit area. Currently, without this distance information, the data collected by ARUs can provide counts of the number and type of vocalizing individuals, which allows researchers to compare the relative abundance of species between locations, but not the species density.

However, to evaluate how bird populations are changing over time and to make effective management decisions, we need to know how many animals are present in very large regions. Thus, Yip’s research is focused on how to convert relative abundance data into species density: “The question is, how do we convert raw bird call counts into some sort of inference about the population of that bird for a given area?” asks Yip. “For example, if I hear four rusty blackbirds on a recording, what does that actually mean in terms of blackbird density in the area?”
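To make Yip’s question concrete, here is a minimal sketch of the simplest possible conversion: divide the count by the circular area the recorder can “hear”. The 300-metre radius and the function name are assumptions made for illustration, not values from Yip’s work, and the sketch assumes every bird inside the radius is detected and none outside it, which real surveys cannot take for granted.

```python
import math


def density_per_hectare(count: int, detection_radius_m: float) -> float:
    """Individuals per hectare inside a circular sampling area.

    Assumes perfect detection inside the radius and none beyond it.
    """
    if detection_radius_m <= 0:
        raise ValueError("detection radius must be positive")
    area_ha = math.pi * detection_radius_m ** 2 / 10_000  # 1 ha = 10,000 m^2
    return count / area_ha


# Hypothetical example: four rusty blackbirds on a recording,
# with an ASSUMED detection radius of 300 m.
blackbird_density = density_per_hectare(4, 300.0)
print(f"{blackbird_density:.3f} birds per hectare")
```

The answer depends heavily on the radius, which is exactly the quantity that is currently unknown for ARUs.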

So, what approach are Yip and his crew using to estimate the maximum distance sound can travel and still be recorded by the ARU? The simple explanation is that field crew members play back a bird call at varying distances from the ARU until they reach a distance where the call is no longer “heard”, or recorded, by the ARU. Complicating this task, however, is the fact that Yip needs to account for how far sound travels in different types of forests, in different weather conditions, and across different landscapes.
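The playback idea can be sketched in a few lines: for each habitat, keep the farthest distance at which the call still showed up on the recording. The habitat names, distances, and results below are invented purely for illustration and are not measurements from the study; a real analysis would also model weather and other factors rather than take a simple maximum.

```python
from collections import defaultdict

# HYPOTHETICAL playback trials:
# (habitat, distance from the ARU in metres, was the call detected on the recording?)
trials = [
    ("bog", 50, True), ("bog", 150, True), ("bog", 300, True), ("bog", 450, False),
    ("conifer", 50, True), ("conifer", 150, True), ("conifer", 300, False),
    ("road", 50, True), ("road", 300, True), ("road", 450, True), ("road", 600, False),
]


def max_detection_distance(trials):
    """Return the farthest distance (m) at which a playback was detected, per habitat."""
    farthest = defaultdict(float)  # habitats with no detections are simply omitted
    for habitat, distance_m, detected in trials:
        if detected:
            farthest[habitat] = max(farthest[habitat], distance_m)
    return dict(farthest)


detection_limits = max_detection_distance(trials)
print(detection_limits)
```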

Daniel Yip and field assistant Natasha Annich listen to playback of ARU recordings (Photo: Josué Arteaga).

“There are an infinite number of things that can affect what you’re hearing—the type of habitat, even whether the bird is facing the recorder or not—so I’m attempting to build a model for bioacoustic data that accounts for the most important of these factors,” describes Yip. “Essentially, I’m trying to develop the correction factors that will allow us to convert the raw count recorder data into estimates of species density.”

One of the most important factors Yip has to account for is habitat type. To quantitatively evaluate the effect of habitat on sound transmission, Yip is sampling how far bird calls can travel in forested wetlands (bogs and fens), uplands (coniferous and deciduous forests), and along roads through the forest. “In each of these locations,” says Yip, “I’ll be trying to determine what area of a forest you can cover with these recorders.”

Similarly, temperature and other weather conditions, like rain, wind, and humidity, can affect how far sound travels, so Yip is collecting detailed weather information to add to his estimations.

Furthermore, Yip needs to account for the fact that the birds themselves sing at different frequencies and thus their calls travel different distances. Owls, for example, call at much lower frequencies and can be heard from up to a kilometre away, whereas songbirds sing at much higher frequencies and can be heard from only 300 or 400 metres away.
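Those distances matter more than they might seem, because the area a recorder samples grows with the square of the detection distance. The short sketch below compares the sampled area for an owl and a songbird using the distances quoted above; treating the sampled area as a perfect circle is a simplifying assumption, and the function name is illustrative.

```python
import math


def sampled_area_km2(detection_radius_m: float) -> float:
    """Area of a circle with the given radius, in square kilometres."""
    radius_km = detection_radius_m / 1000
    return math.pi * radius_km ** 2


owl_area = sampled_area_km2(1000)      # owls: heard from up to about 1 km
songbird_area = sampled_area_km2(300)  # songbirds: about 300 m
area_ratio = owl_area / songbird_area

print(f"Owl: {owl_area:.2f} km^2, songbird: {songbird_area:.2f} km^2, "
      f"ratio: {area_ratio:.1f}x")
```

Under these assumptions, one owl and one songbird on the same recording imply very different densities, since the owl could be anywhere in an area roughly eleven times larger.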

ARUs have often been used to determine whether a species is present or absent; however, Yip’s work will help us to understand the population status of a species in an area. And given the volume of data being collected by ARUs, Yip’s work could prove highly useful. “So far there isn’t an accurate way to estimate [density using acoustic data], so if I’m successful, we can apply this model to all of the raw counts, which should lead to much better understanding of the ecology of individual species, as well as communities.”

Another major benefit of Yip’s graduate research will be the ability to compare detection distances of different types of recorders. Researchers record vocalizing species with a range of devices, including River Forks units, Song Meters, and Zoom recorders. Once complete, Yip’s work will permit researchers to “use any type of recorder, in any type of habitat, and correct for that variation, standardize the recording, and get a density estimation.”

The Acoustic Monitoring Group is already well on its way toward accomplishing these goals, with a busy 2013 field season and an even busier 2014 field season already underway. They deployed 675 ARUs at 133 sites between March and August of 2013, which allowed Yip and his group to positively identify sites with rare and elusive bird and amphibian species (including 60 stations with yellow rail detections, 24 stations with barred owl detections, and 11 stations with Canadian toads present), as well as over 170 other species. The 2014 field season is deploying additional ARUs at new sites, as well as continuing to monitor at the previous locations – enough to keep Yip busy for some time!

To learn more, see the acoustic ecology work of Dr. Erin Bayne’s lab at the University of Alberta.

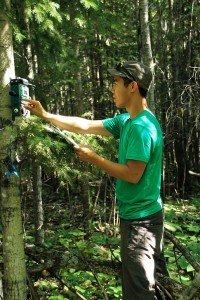

Top Photo: Ricky Kong, Josué Arteaga (Photo: Daniel Yip).